Visualizing CNN intermediate layers (feature maps) and weights in Keras: a summary

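Getting the output of an intermediate layer in Keras

There are two ways to get the output of an intermediate CNN layer in Keras.

Case 1: build a sub-model with `Model`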

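```python
from keras.models import Model

# Sub-model that shares the weights of `model` but stops at the layer named "fc2"
# (model: an already-built model with a layer named "fc2", e.g. VGG16; X: an input batch)
intermediate_layer_model = Model(inputs=model.input,
                                 outputs=model.get_layer("fc2").output)
y = intermediate_layer_model.predict(X)
print(y.shape)
```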

Case 2: use the backend function `K.function`

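```python
from keras import backend as K

# Function from the model input (plus the learning-phase flag) to the output of layer 21.
# The learning phase must be listed as an input for the [X, 0] call below to match.
get_layer_output = K.function([model.layers[0].input, K.learning_phase()],
                              [model.layers[21].output])
# Learning phase 0 = test mode, 1 = training mode (affects Dropout/BatchNormalization)
y = get_layer_output([X, 0])[0]
print(y.shape)
```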
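For a convolutional layer, either case returns an array of shape (batch, height, width, channels); a dense layer such as VGG16's "fc2" gives a 2-D (batch, 4096) array instead, which cannot be laid out as images this way. To actually look at conv feature maps, one option is to min-max normalize each channel and tile the channels into a grid. This is a minimal NumPy sketch; the function name `tile_feature_maps`, the 8-column layout and the 1-pixel gap are my own assumptions, not part of Keras.

```python
import math

import numpy as np


def tile_feature_maps(fmap, cols=8, pad=1):
    """Tile the channels of one feature map (H, W, C) into a single 2-D image.

    Each channel is min-max normalized to [0, 1] on its own; tiles are
    separated by `pad` rows/columns of zeros.
    """
    h, w, c = fmap.shape
    rows = math.ceil(c / cols)
    grid = np.zeros((rows * h + (rows - 1) * pad,
                     cols * w + (cols - 1) * pad), dtype=np.float32)
    for k in range(c):
        ch = fmap[:, :, k].astype(np.float32)
        lo, hi = ch.min(), ch.max()
        # A constant channel would divide by zero; leave it black instead
        ch = (ch - lo) / (hi - lo) if hi > lo else np.zeros_like(ch)
        r, col = divmod(k, cols)  # fill the grid row by row
        top, left = r * (h + pad), col * (w + pad)
        grid[top:top + h, left:left + w] = ch
    return grid


# With a real model: plt.imshow(tile_feature_maps(y[0]), cmap="gray")
rng = np.random.default_rng(0)
fake = rng.random((4, 5, 10))  # stand-in for one (H, W, C) feature map
grid = tile_feature_maps(fake, cols=4)
print(grid.shape)  # (14, 23)
```

Normalizing per channel makes weak channels visible, at the cost of hiding how strongly each channel actually responds.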

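Visualizing the weights of an intermediate layer

To visualize the weights, reuse the `get_layer` lookup from the code above and call `get_weights()` on the layer: element `[0]` is the kernel and, for a layer with a bias, element `[1]` is the bias.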

```python
weights = resnet.get_layer("conv1").get_weights()[0]
```

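```python
from keras.applications import ResNet50
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image

resnet = ResNet50()
resnet.summary()

# Kernel of the first conv layer, shape (7, 7, 3, 64) = (height, width, input channels, filters).
# Newer tf.keras versions name this layer "conv1_conv" instead of "conv1".
weights = resnet.get_layer("conv1").get_weights()[0]
print("shape", weights.shape)

# Show the first filter. The channel axis is reversed (BGR -> RGB) because the
# Keras ResNet50 weights expect caffe-style BGR input.
w = weights[:, :, ::-1, 0].copy()
m = w.min()
M = w.max()
w = (w - m) / (M - m)  # min-max normalize to [0, 1] for imshow
plt.imshow(w)
plt.show()

# Tile all 64 filters into an 8x8 grid with a 1-pixel gap between tiles
result = Image.new("RGB", (7 * 8 + (8 - 1), 7 * 8 + (8 - 1)))
for i in range(64):
    w = weights[:, :, ::-1, i].copy()
    M = w.max()
    m = w.min()
    w = (w - m) / (M - m)
    w *= 255
    img = Image.fromarray(w.astype("uint8"), mode="RGB")
    # paste() takes (x, y): filter i goes to column i // 8, row i % 8
    result.paste(img, (7 * (i // 8) + (i // 8), 7 * (i % 8) + (i % 8)))
plt.imshow(result)
plt.show()
```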
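The loop above normalizes every filter to its own min/max, which makes weak filters look as contrasty as strong ones. The following NumPy sketch builds the same 63x63 grid as a single uint8 array (same column-by-column placement and BGR -> RGB flip) and adds an option to use one global scale so filter magnitudes stay comparable. The function name `filters_to_grid` and the `per_filter` flag are hypothetical, and random numbers stand in for the real `conv1` weights.

```python
import numpy as np


def filters_to_grid(weights, n_rows=8, n_cols=8, pad=1, per_filter=True):
    """Arrange conv kernels of shape (kh, kw, 3, n_filters) into one RGB uint8 image.

    Filter i goes to grid row i % n_rows and grid column i // n_rows, as in the
    PIL loop; channels are reversed (BGR -> RGB) and scaled to 0..255.
    """
    kh, kw, _, n = weights.shape
    w = weights[:, :, ::-1, :].astype(np.float64)  # BGR -> RGB
    if not per_filter:
        # One scale for all filters keeps their relative magnitudes visible
        lo, hi = w.min(), w.max()
    grid = np.zeros((n_rows * kh + (n_rows - 1) * pad,
                     n_cols * kw + (n_cols - 1) * pad, 3), dtype=np.uint8)
    for i in range(min(n, n_rows * n_cols)):
        f = w[:, :, :, i]
        if per_filter:
            lo, hi = f.min(), f.max()
        scaled = (f - lo) / (hi - lo) if hi > lo else np.zeros_like(f)
        r, c = i % n_rows, i // n_rows
        top, left = r * (kh + pad), c * (kw + pad)
        grid[top:top + kh, left:left + kw] = (scaled * 255).astype(np.uint8)
    return grid


rng = np.random.default_rng(0)
fake_weights = rng.normal(size=(7, 7, 3, 64))  # stand-in for the conv1 kernel
grid = filters_to_grid(fake_weights)
print(grid.shape, grid.dtype)  # (63, 63, 3) uint8
```

With real weights, `plt.imshow(filters_to_grid(weights, per_filter=False))` shows which filters carry most of the weight magnitude.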

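Project: making a dirt-cheap RC car drive itself (note: the RC car has been replaced with an EV3)

Goal: make the car drive autonomously

What I used

Hardware

System overview

Here is the course used this time.

This time, I limited the language to Python.

Driving behavior was framed as a classification problem whose classes take the number of visible lines into account.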

Image transfer (video streaming)

- Images captured from the webcam are streamed with MJPG-streamer.

*Settings

fps:5

width:640

height:480

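*Command

```shell
./mjpg_streamer -i "./input_uvc.so -f 10 -r 320x240 -d /dev/video0 -y -n" -o "./output_http.so -w ./www -p 8080"
```

Note that this command actually uses `-f 10 -r 320x240` (10 fps at 320x240), which differs from the settings listed above.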

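Motor control

- Controls the EV3's two motors. The control signal corresponding to the classification result on the server (PC) is received over MQTT and used to drive the motors.

*Program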

Training and validation

- The CNN is implemented with Keras.

*Program

```python
# coding:utf-8
import os

from keras import optimizers
from keras.applications.vgg16 import VGG16
from keras.layers import Input, Dropout, Flatten, Dense
from keras.models import Sequential, Model
from keras.preprocessing.image import ImageDataGenerator

# Subdirectory names under data/train and data/test ("foward" spelling kept as-is)
classes = ['foward_1', 'foward_2', 'left_1', 'left_2',
           'right_1', 'right_2', 'other']

batch_size = 32
nb_classes = len(classes)

img_rows, img_cols = 150, 150
channels = 3

train_data_dir = 'data/train'
validation_data_dir = 'data/test'

nb_train_samples = 1699
nb_val_samples = 447
nb_epoch = 100

result_dir = 'results'
if not os.path.exists(result_dir):
    os.mkdir(result_dir)


def save_history(history, result_file):
    # save_history was not shown in the original post; this is a hypothetical
    # minimal version that writes per-epoch metrics as tab-separated text
    keys = sorted(history.history.keys())
    with open(result_file, "w") as fp:
        fp.write("epoch\t" + "\t".join(keys) + "\n")
        for i in range(len(history.history[keys[0]])):
            fp.write("\t".join([str(i)] + [str(history.history[k][i]) for k in keys]) + "\n")


if __name__ == '__main__':
    # Load VGG16 and its ImageNet weights; the fully-connected (FC) top is not needed (include_top=False)
    input_tensor = Input(shape=(img_rows, img_cols, channels))
    vgg16 = VGG16(include_top=False, weights='imagenet', input_tensor=input_tensor)
    # vgg16.summary()

    # Build the FC head; Flatten's input shape excludes the batch dimension
    top_model = Sequential()
    top_model.add(Flatten(input_shape=vgg16.output_shape[1:]))
    top_model.add(Dense(256, activation='relu'))
    top_model.add(Dropout(0.5))
    top_model.add(Dense(nb_classes, activation='softmax'))

    # Load pre-trained FC weights (optional)
    # top_model.load_weights(os.path.join(result_dir, 'bottleneck_fc_model.h5'))

    # Connect VGG16 and the FC head (Keras 2 uses inputs=/outputs=)
    model = Model(inputs=vgg16.input, outputs=top_model(vgg16.output))

    # Freeze the first 15 layers (input + blocks 1-4); only block 5 and the head are trained
    for layer in model.layers[:15]:
        layer.trainable = False

    # Is SGD better for fine-tuning?
    model.compile(loss='categorical_crossentropy',
                  optimizer=optimizers.SGD(lr=1e-4, momentum=0.9),
                  metrics=['accuracy'])

    train_datagen = ImageDataGenerator(
        rotation_range=0.1,
        width_shift_range=0.2,
        height_shift_range=0.2,
        shear_range=0.2,
        zoom_range=0.2,
        channel_shift_range=0.1,
        fill_mode='nearest',
        rescale=None)
    test_datagen = ImageDataGenerator()

    train_generator = train_datagen.flow_from_directory(
        train_data_dir, target_size=(img_rows, img_cols), color_mode='rgb',
        classes=classes, class_mode='categorical',
        batch_size=batch_size, shuffle=True)

    validation_generator = test_datagen.flow_from_directory(
        validation_data_dir, target_size=(img_rows, img_cols), color_mode='rgb',
        classes=classes, class_mode='categorical',
        batch_size=batch_size, shuffle=True)

    # Fine-tuning (Keras 2 counts steps (batches), not samples)
    history = model.fit_generator(
        train_generator,
        steps_per_epoch=nb_train_samples // batch_size,
        epochs=nb_epoch,
        validation_data=validation_generator,
        validation_steps=nb_val_samples // batch_size)

    model.save_weights(os.path.join(result_dir, '20180802.h5'))
    save_history(history, os.path.join(result_dir, '20180802.txt'))
```
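`nb_train_samples` and `nb_val_samples` are hard-coded (1699 and 447), so they silently go stale when images are added. `flow_from_directory` expects one subdirectory per class under `data/train` and `data/test`, so the counts can be read from disk instead. A small sketch; the helper name `count_images` and the extension list (roughly what Keras accepts) are my assumptions. As far as I know, the iterator returned by `flow_from_directory` also reports the total as `.samples`.

```python
import os

# Roughly the image extensions Keras' flow_from_directory accepts (assumption)
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".bmp", ".ppm", ".tif", ".tiff"}


def count_images(root, classes):
    """Return {class_name: number_of_images} for the root/<class_name>/ directories."""
    counts = {}
    for name in classes:
        n = 0
        # os.walk yields nothing for a missing directory, so that class counts as 0
        for _, _, filenames in os.walk(os.path.join(root, name)):
            n += sum(os.path.splitext(f)[1].lower() in IMAGE_EXTS for f in filenames)
        counts[name] = n
    return counts


# counts = count_images('data/train', classes)
# nb_train_samples = sum(counts.values())
# steps_per_epoch = max(1, nb_train_samples // batch_size)
```

Printing `counts` per class is also a quick check for class imbalance (for example, far more `foward_1` images than `right_2`).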

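- CNN model used: VGG16 (transfer learning)

Driving test

*Raspberry Pi 3

Streams video from the webcam.

*Server

Captures frames from the streamed video, feeds each frame to the trained model for classification, and sends the motor control signal for the predicted class over MQTT.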

```python
#!/usr/bin/env python
# coding:utf-8
import os

import cv2
import matplotlib.pyplot as plt
import numpy as np
import paho.mqtt.client as mqtt
from PIL import Image
from keras.applications.vgg16 import VGG16
from keras.layers import Input, Dropout, Flatten, Dense
from keras.models import Sequential, Model
from keras.preprocessing import image

result_dir = 'results'
classes = ['foward_1', 'foward_2', 'left_1', 'left_2',
           'right_1', 'right_2', 'other']
nb_classes = len(classes)
img_height, img_width = 150, 150
channels = 3

# VGG16 (same architecture as in training)
input_tensor = Input(shape=(img_height, img_width, channels))
vgg16 = VGG16(include_top=False, weights='imagenet', input_tensor=input_tensor)

# FC head
fc = Sequential()
fc.add(Flatten(input_shape=vgg16.output_shape[1:]))
fc.add(Dense(256, activation='relu'))
fc.add(Dropout(0.5))
fc.add(Dense(nb_classes, activation='softmax'))

# Connect VGG16 and the FC head
model = Model(inputs=vgg16.input, outputs=fc(vgg16.output))

# Load the trained weights
model.load_weights(os.path.join(result_dir, '20180803_3.h5'))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# MJPG-streamer stream from the Raspberry Pi
URL = "http://192.168.0.4:8080/?action=stream"
vc = cv2.VideoCapture(URL)

# MQTT broker settings
host = '192.168.0.17'
port = 1883
keepalive = 60
topic = 'topic/motor/dt'


def cap(frame, client):
    # OpenCV delivers BGR; convert to RGB before classifying
    frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    img = Image.fromarray(np.uint8(frame)).resize((img_width, img_height))
    plt.imshow(img)
    plt.pause(0.01)

    x = image.img_to_array(img)
    pred_data = np.expand_dims(x, axis=0)  # add the batch dimension
    pred = model.predict(pred_data)[0]

    # Publish "<motor A speed>,<motor D speed>" for the most likely class
    label = int(np.argmax(pred))
    if label == 0:
        print('foward_1')
        client.publish(topic, "310,300")
    elif label == 1:
        print('foward_2')
        client.publish(topic, "410,400")
    elif label == 2:
        print('left_1')
        client.publish(topic, "500,200")
    elif label == 3:
        print('left_2')
        client.publish(topic, "300,200")
    elif label == 4:
        print('right_1')
        client.publish(topic, "200,500")
    elif label == 5:
        print('right_2')
        client.publish(topic, "200,300")
    elif label == 6:
        print('other')
        client.publish(topic, "-200,300")

    # Print the top 3 classes by predicted probability
    top = 3
    top_indices = pred.argsort()[-top:][::-1]
    result = [(classes[i], pred[i]) for i in top_indices]
    print(result)
    print("")


# Written for paho-mqtt 1.x; with 2.x use mqtt.Client(mqtt.CallbackAPIVersion.VERSION1)
client = mqtt.Client()
client.connect(host, port, keepalive)

if vc.isOpened():  # try to get the first frame
    is_capturing, frame = vc.read()
else:
    is_capturing = False

frame_count = 0
try:
    while is_capturing:
        is_capturing, frame = vc.read()
        if not is_capturing:
            break
        frame_count += 1
        # Classify only every 5th frame so inference keeps up with the stream
        if frame_count % 5 == 0:
            cap(frame, client)
except KeyboardInterrupt:  # Ctrl+C stops the script
    pass
finally:
    vc.release()
```
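The if/elif chain in `cap()` maps each class index to a fixed pair of motor speeds. A table-driven version keeps that mapping in one place, which makes tuning the speeds easier. This is a sketch: the speed values are the ones published in `cap()`, while the names `COMMANDS` and `command_for` are hypothetical.

```python
import numpy as np

classes = ['foward_1', 'foward_2', 'left_1', 'left_2',
           'right_1', 'right_2', 'other']

# (motor A speed, motor D speed) per class, copied from cap()
COMMANDS = {
    'foward_1': (310, 300),
    'foward_2': (410, 400),
    'left_1': (500, 200),
    'left_2': (300, 200),
    'right_1': (200, 500),
    'right_2': (200, 300),
    'other': (-200, 300),
}


def command_for(pred):
    """Return (class_name, MQTT payload) for one softmax output vector."""
    label = classes[int(np.argmax(pred))]
    speed_a, speed_d = COMMANDS[label]
    return label, "{},{}".format(speed_a, speed_d)


# Inside cap():
#     label, payload = command_for(pred)
#     print(label)
#     client.publish(topic, payload)
print(command_for(np.array([0.05, 0.1, 0.6, 0.1, 0.05, 0.05, 0.05])))  # ('left_1', '500,200')
```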

*EV3

Applies the received control signal to the motors and drives them.

```python
#!/usr/bin/env python3
import paho.mqtt.client as mqtt
from ev3dev.auto import *  # ev3dev-lang-python 1.x; newer releases use the ev3dev2 package

count = []
ma = Motor('outA')
md = Motor('outD')


def on_connect(client, userdata, flags, rc):
    print("Connected with result code " + str(rc))
    client.subscribe("topic/motor/dt")


def on_message(client, userdata, msg):
    # Payload is "<speed for outA>,<speed for outD>", e.g. "310,300"
    msg_str = msg.payload.decode("utf-8")
    msg_array = msg_str.split(",")
    speed_a = int(msg_array[0])  # convert from str before passing to the motor
    speed_d = int(msg_array[1])
    count.append(1)
    print(len(count))
    print(msg_array)
    # Run each motor for 250 ms at the requested speed, then brake
    ma.run_timed(time_sp=250, speed_sp=speed_a, stop_action='brake')
    md.run_timed(time_sp=250, speed_sp=speed_d, stop_action='brake')


# Written for paho-mqtt 1.x; with 2.x use mqtt.Client(mqtt.CallbackAPIVersion.VERSION1)
client = mqtt.Client()
client.on_connect = on_connect
client.on_message = on_message
client.connect("192.168.0.17", 1883, 60)

ma.run_direct()
md.run_direct()
ma.duty_cycle_sp = 0
md.duty_cycle_sp = 0

client.loop_forever()
```
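`on_message` trusts the payload, so a malformed message (an empty string, a non-number, a third field) raises an exception inside the MQTT callback. A minimal parsing sketch, assuming payloads of the form `"<speed A>,<speed D>"` as published by the server. The name `parse_command` is hypothetical, and the default limit of 1000 is an illustrative assumption; ev3dev motors report their actual limit in `Motor.max_speed`.

```python
def parse_command(payload, max_speed=1000):
    """Parse b"310,300" into (310, 300), clamping each value to +-max_speed.

    Returns None for a malformed payload so the caller can simply ignore it.
    """
    try:
        text = payload.decode("utf-8") if isinstance(payload, bytes) else payload
        parts = text.strip().split(",")
        if len(parts) != 2:
            return None
        a, d = (int(p) for p in parts)
    except (UnicodeDecodeError, ValueError):
        return None

    def clamp(v):
        return max(-max_speed, min(max_speed, v))

    return clamp(a), clamp(d)


# In on_message():
#     cmd = parse_command(msg.payload, max_speed=ma.max_speed)
#     if cmd is not None:
#         ma.run_timed(time_sp=250, speed_sp=cmd[0], stop_action='brake')
#         md.run_timed(time_sp=250, speed_sp=cmd[1], stop_action='brake')
print(parse_command(b"310,300"))  # (310, 300)
print(parse_command(b"oops"))     # None
```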

Video of the actual run

Results and discussion

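Project: making a dirt-cheap RC car drive itself (note: the programs are scheduled to be posted on 6/25)

Goal: make an RC car drive autonomously for a total cost of 10,000 yen

What I used

Hardware

System overview

This time, I limited the language to Python.

Image transfer

Motor control

- The motors are controlled with an L298N. The control signal corresponding to the classification result on the server (PC) is received over MQTT and used to drive the motors.

*Program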

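Training and validation

- The CNN is implemented with Keras.

*Program

- CNN model used: VGG16 (transfer learning)

Results and discussion

The whole system came in under 10,000 yen. However, image transfer is still a problem; I plan to solve it by streaming with a Picamera and ffmpeg or similar tools.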

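Controlling motors with the L298N

What is the L298N?

It can drive two motors independently and is well suited to forward/reverse control.

Each motor is controlled by an enable signal (rotate or not) plus two lines that set the rotation direction.

Besides control from a microcontroller, manual control with switches and the like is also easy.

Source: L298N 2A dual motor controller, Akizuki Denshi Tsusho (electronic parts online store, microcontroller category)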

http://akizukidenshi.com/catalog/g/gM-06680/

| Pin | Description |
| --- | --- |
| ENA | Motor A speed control |
| IN1 | Motor A direction pin 1 |
| IN2 | Motor A direction pin 2 |
| IN3 | Motor B direction pin 1 |
| IN4 | Motor B direction pin 2 |
| ENB | Motor B speed control |

| Terminal | Description |
| --- | --- |
| VMS | 5-35 V input |
| GND | 0 V |
| 5V | 5 V input/output |
| OUT1 | Motor A |
| OUT2 | Motor A |
| OUT3 | Motor B |
| OUT4 | Motor B |
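Per the tables above, each motor takes an enable pin (ENA/ENB, which can carry PWM for speed) and two direction pins (IN1/IN2 for motor A, IN3/IN4 for motor B). The sketch below turns a signed speed into those pin levels using the usual H-bridge convention as I understand it from the L298 truth table: IN1 high/IN2 low turns one way, the reverse turns the other way, and enable low lets the motor coast. Which physical direction counts as "forward" depends on your wiring, and the function name `l298n_levels` is hypothetical.

```python
def l298n_levels(speed):
    """Map a signed speed in -100..100 to (in1, in2, enable_duty) for one motor.

    in1/in2 are logic levels for IN1/IN2 (or IN3/IN4); enable_duty is the PWM
    duty cycle in percent for ENA (or ENB).
    """
    if not -100 <= speed <= 100:
        raise ValueError("speed must be in -100..100")
    if speed > 0:
        return True, False, speed    # one direction
    if speed < 0:
        return False, True, -speed   # opposite direction
    return False, False, 0           # enable low: the motor coasts to a stop


# With RPi.GPIO (the pin numbers depend on your wiring):
#     in1, in2, duty = l298n_levels(60)
#     GPIO.output(IN1_PIN, in1); GPIO.output(IN2_PIN, in2)
#     ena_pwm.ChangeDutyCycle(duty)
print(l298n_levels(60))   # (True, False, 60)
print(l298n_levels(-30))  # (False, True, 30)
```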

Motor control with a Raspberry Pi and Python

```python
import time

import RPi.GPIO as gpio


def init():
    # BCM numbering; the four pins are wired to the L298N direction inputs
    # (ENA/ENB presumably held high, e.g. by the module's jumpers)
    gpio.setmode(gpio.BCM)
    gpio.setup(17, gpio.OUT)
    gpio.setup(22, gpio.OUT)
    gpio.setup(23, gpio.OUT)
    gpio.setup(24, gpio.OUT)


def forward(tf):
    init()
    gpio.output(17, True)
    gpio.output(22, False)
    gpio.output(23, True)
    gpio.output(24, False)
    time.sleep(tf)  # run for tf seconds
    gpio.cleanup()


def reverse(tf):
    init()
    gpio.output(17, False)
    gpio.output(22, True)
    gpio.output(23, False)
    gpio.output(24, True)
    time.sleep(tf)
    gpio.cleanup()


print("forward")
forward(4)
print("backward")
reverse(2)
```

```python
#!/usr/bin/python3.4
# MKerbachi November 6th, 2015
# Python code to control two motors with Rpi A+ with the H bridge l298n
import curses
import time

import RPi.GPIO as GPIO  # always needed with RPi.GPIO

# get the curses screen window
screen = curses.initscr()
# turn off input echoing
curses.noecho()
# respond to keys immediately (don't wait for enter)
curses.cbreak()
# map arrow keys to special values
screen.keypad(True)

# If the two GNDs (Pi + L298N) are not interconnected, this won't work!
# For all keyboard symbols: https://docs.python.org/2/library/curses.html
GPIO.setmode(GPIO.BCM)  # choose BCM or BOARD numbering schemes. I use BCM

#################################################################
#                           Variables                           #
#################################################################
# Motor #1: pin 18 = enable, pins 24/23 = direction
GPIO.setup(18, GPIO.OUT)
GPIO.setup(24, GPIO.OUT)
GPIO.setup(23, GPIO.OUT)
p24 = GPIO.PWM(24, 100)
p23 = GPIO.PWM(23, 100)
p18 = GPIO.PWM(18, 100)  # PWM object on pin 18 at 100 Hz
# You can have more than one of these, but each port needs its own name
# (e.g. p1, p2, motor, servo1, etc.)

# Motor #2: pin 13 = enable, pins 27/17 = direction
GPIO.setup(13, GPIO.OUT)
GPIO.setup(27, GPIO.OUT)
GPIO.setup(17, GPIO.OUT)
p27 = GPIO.PWM(27, 100)
p17 = GPIO.PWM(17, 100)
p13 = GPIO.PWM(13, 100)

LastKey = ""


#################################################################
#                           Functions                           #
#################################################################
def Stop():
    for p in (p18, p23, p24, p13, p27, p17):
        p.start(0)
    time.sleep(0.3)
    print("Stop executed")


def Left():
    if LastKey != 'left':
        Stop()
    p18.start(60)
    p23.start(0)
    p24.start(100)
    time.sleep(0.4)
    p13.start(60)
    p27.start(0)
    p17.start(100)


def Right():
    if LastKey != 'right':
        Stop()
    p18.start(60)
    p23.start(100)
    p24.start(0)
    time.sleep(0.4)
    p13.start(60)
    p27.start(100)
    p17.start(0)


def Up():
    Stop()
    p18.start(60)
    p23.start(100)
    p24.start(0)
    time.sleep(0.3)
    p13.start(60)
    p27.start(0)
    p17.start(100)
    time.sleep(0.3)


def Down():
    Stop()
    p18.start(60)
    p23.start(0)
    p24.start(100)
    time.sleep(0.3)
    p13.start(60)
    p27.start(100)
    p17.start(0)
    time.sleep(0.3)


# key code -> (name, action); the space bar stops both motors
actions = {
    ord(' '): ("enter", Stop),
    curses.KEY_RIGHT: ("right", Right),
    curses.KEY_LEFT: ("left", Left),
    curses.KEY_UP: ("up", Up),
    curses.KEY_DOWN: ("down", Down),
}

try:
    while True:
        char = screen.getch()
        # print doesn't work well with curses; screen.addstr is the curses way
        print('you entered', char)
        if char == ord('q'):
            break
        elif char in actions:
            name, action = actions[char]
            if LastKey != name:
                print('Last key was not %s, it was %s \n' % (name, LastKey))
            print(name + '\n')
            action()
            # Update after the action so Left()/Right() still see the previous key
            LastKey = name
        else:
            print('Nothing entered!\n')
finally:
    # shut down cleanly
    print('In the finally section now')
    curses.nocbreak()
    screen.keypad(0)
    curses.echo()
    curses.endwin()
    for p in (p13, p17, p27, p23, p24, p18):
        p.stop()  # stop the PWM output
    GPIO.cleanup()  # when your program exits, tidy up after yourself
```

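Notes

The Raspberry Pi has no true analog output, but RPi.GPIO can emulate one with (software) PWM: you specify a frequency and a duty cycle, which is what motor speed control uses.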

First, set a frequency for the pin to get a PWM object: `pwm = GPIO.PWM(channel, frequency_hz)`.

Then start output on the PWM object with a duty cycle in percent (0 to 100): `pwm.start(duty_cycle)`.

For example, PWM output on pin 18 at 1 kHz with a 50% duty cycle:

```python
pwm = GPIO.PWM(18, 1000)
pwm.start(50)
```

To change the frequency while running, use `pwm.ChangeFrequency(frequency_hz)`.

To change the duty cycle while running, use `pwm.ChangeDutyCycle(duty_cycle)`.

To stop PWM output, call `pwm.stop()`. Make sure to stop it when the script ends.
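The frequency and duty cycle together fix the on/off times of the signal: the period is 1/f, and the pin is high for duty/100 of each period, so the average output voltage is the duty fraction of the high level (3.3 V on Raspberry Pi GPIO). A small sketch; the function name `pwm_timing` is hypothetical. For the 1 kHz, 50% example above, the pin is high for 0.5 ms of every 1 ms; the `start(60)` calls at 100 Hz in the keyboard-control script keep the enable pin high for 6 ms of every 10 ms.

```python
def pwm_timing(frequency_hz, duty_cycle, v_high=3.3):
    """Return (period_s, on_time_s, off_time_s, average_voltage) of a PWM signal.

    duty_cycle is in percent (0-100), as passed to pwm.start()/ChangeDutyCycle().
    v_high defaults to the Raspberry Pi's 3.3 V GPIO level.
    """
    if frequency_hz <= 0 or not 0 <= duty_cycle <= 100:
        raise ValueError("need frequency_hz > 0 and 0 <= duty_cycle <= 100")
    period = 1.0 / frequency_hz
    on_time = period * duty_cycle / 100.0
    return period, on_time, period - on_time, v_high * duty_cycle / 100.0


period, on, off, v_avg = pwm_timing(1000, 50)
print(period, on, off, v_avg)
```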

What is Intersection over Union (IoU)?


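IoU is a measure used in object detection to evaluate how well two regions overlap.

For a predicted bounding box b and the ground-truth box g (the target region), IoU is the area of their intersection divided by the area of their union. It is 1 when the two boxes coincide and 0 when they do not overlap.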

IoU(b,g)=area(b∩g)/area(b∪g)
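A minimal sketch of the formula for axis-aligned boxes, assuming the `(x1, y1, x2, y2)` corner format with x2 > x1 and y2 > y1 (detection libraries differ; some use `(x, y, w, h)` instead). The union is computed as area(b) + area(g) - area(b ∩ g), so the overlap is not counted twice.

```python
def iou(b, g):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Intersection rectangle; its width/height go negative when the boxes do not overlap
    ix1, iy1 = max(b[0], g[0]), max(b[1], g[1])
    ix2, iy2 = min(b[2], g[2]), min(b[3], g[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    area_g = (g[2] - g[0]) * (g[3] - g[1])
    union = area_b + area_g - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 0.14285714285714285 (= 1/7)
print(iou((0, 0, 2, 2), (0, 0, 2, 2)))  # 1.0
```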

Python requests summary

What is requests?

requests is a third-party library for HTTP communication. With it you can download data from websites and call REST APIs. Install command: `pip install requests`

Example

Fetch the HTML of the Yahoo! News topics page:

```python
import requests

url = "https://news.yahoo.co.jp/topics"
r = requests.get(url)
print(r.text)
```

Downloading an image from a URL

```python
import os
import urllib.error
import urllib.request

headers = {
    "User-Agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:47.0) Gecko/20100101 Firefox/47.0",
}


def download_image(url, dst_path, headers):
    try:
        # Headers must go through a Request object: urlopen's second
        # argument is POST data, not headers
        request = urllib.request.Request(url=url, headers=headers)
        data = urllib.request.urlopen(request).read()
        with open(dst_path, mode="wb") as f:
            f.write(data)
    except urllib.error.URLError as e:
        print(e)


url = 'URL'
dst_dir = 'data/src'
os.makedirs(dst_dir, exist_ok=True)
dst_path = os.path.join(dst_dir, os.path.basename(url))

download_image(url, dst_path, headers)
```

Downloading HTML content from a URL

```python
# coding:utf-8
import urllib.request

url = "URL"
headers = {
    "User-Agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:47.0) Gecko/20100101 Firefox/47.0",
}

request = urllib.request.Request(url=url, headers=headers)
response = urllib.request.urlopen(request)
html = response.read().decode('utf-8')
print(html)
```
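`decode('utf-8')` assumes the page is UTF-8, but some Japanese sites still serve Shift_JIS or EUC-JP. This sketch uses the charset declared in the `Content-Type` response header when there is one and falls back to UTF-8 otherwise. The function name `fetch_html` is hypothetical, and a charset declared only in an HTML `<meta>` tag is not detected here.

```python
import urllib.request

HEADERS = {
    "User-Agent": "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:47.0) Gecko/20100101 Firefox/47.0",
}


def fetch_html(url, headers=HEADERS, default_charset="utf-8"):
    """Download url and decode it with the charset from the Content-Type header."""
    request = urllib.request.Request(url=url, headers=headers)
    with urllib.request.urlopen(request) as response:
        # get_content_charset() returns None when the header declares no charset
        charset = response.headers.get_content_charset() or default_charset
        return response.read().decode(charset, errors="replace")


# html = fetch_html("URL")
```

`errors="replace"` keeps one bad byte from aborting the whole download; drop it if you prefer to fail loudly.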

References

http://www.python.ambitious-engineer.com/archives/974#requests

Data augmentation for machine learning with the imgaug library

Install

Standard version

sudo pip install imgaug

Latest version

pip install git+https://github.com/aleju/imgaug

Requirements

- six

- numpy

- scipy

- scikit-image (pip install -U scikit-image)

- OpenCV (i.e. cv2)

Usage

To try every augmentation method at once, just run generate_example_images.py.

Types of augmentation

Below is a summary of the methods that Keras (ImageDataGenerator) cannot do.

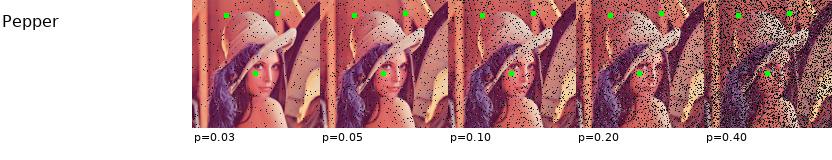

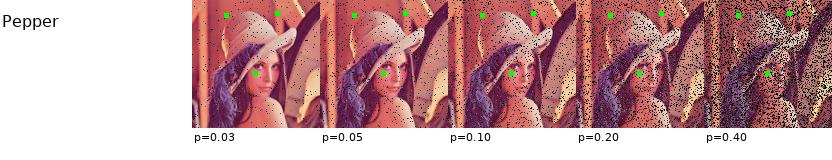

ペッパー

ガウシアンノイズ

ソルト

ペッパー

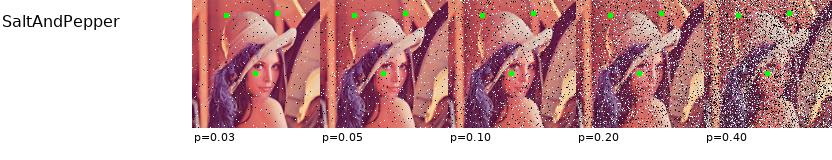

ソルト&ペッパー

piece wise affine(区分積分アフィン?)

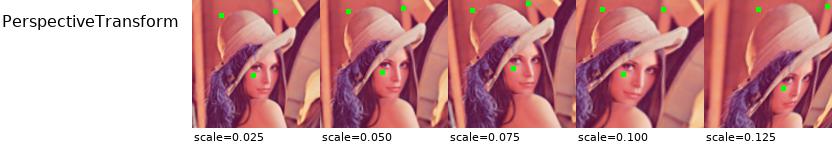

Perspective transform

Crop

Smoothing filters

median blur

gaussian blur

bilateral blur

average blur

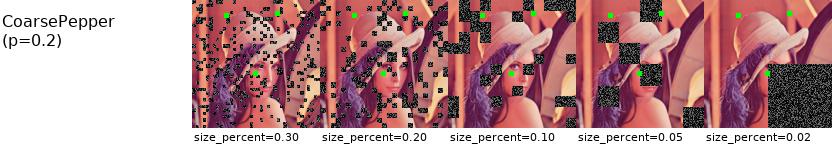

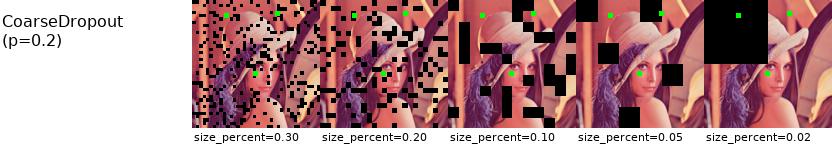

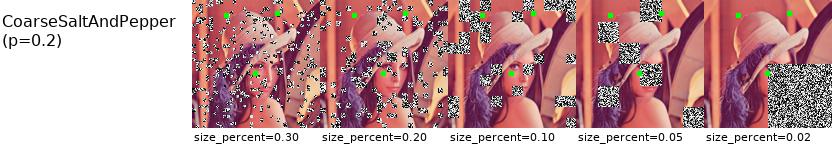

Coarse series

Coarse salt

Coarse pepper

Coarse dropout

Coarse salt & pepper

contrast normalization

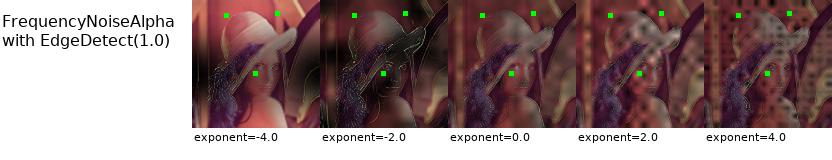

FrequencyNoiseAlpha

Multiply (pixel-wise arithmetic)
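imgaug provides the noise types above as augmenters (for example `iaa.AdditiveGaussianNoise` and `iaa.SaltAndPepper`). To show what these do to the pixels, here is a NumPy-only sketch of Gaussian noise and salt-and-pepper noise. It is not imgaug's implementation; the function names, parameters and defaults are assumptions for illustration.

```python
import numpy as np


def gaussian_noise(img, sigma=10.0, rng=None):
    """Add zero-mean Gaussian noise (std `sigma`, in 0-255 units) to a uint8 image."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = img.astype(np.float32) + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)


def salt_and_pepper(img, p=0.05, rng=None):
    """Set a fraction `p` of pixels to black (pepper) or white (salt), half each.

    One random draw per pixel location is shared by all channels, so the
    replaced pixels become pure black or white rather than colored.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = img.copy()
    mask = rng.random(img.shape[:2])
    out[mask < p / 2] = 0                     # pepper
    out[(mask >= p / 2) & (mask < p)] = 255   # salt
    return out


rng = np.random.default_rng(0)
img = np.full((100, 100, 3), 128, dtype=np.uint8)  # flat gray test image
sp = salt_and_pepper(img, p=0.1, rng=rng)
print((sp == 0).all(axis=2).mean(), (sp == 255).all(axis=2).mean())
```

Salt-only or pepper-only noise is the same idea with a single replacement value; the "coarse" variants apply the mask at a lower resolution so that whole blocks are replaced.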